Krishna Murthy Jatavallabhula

World Models for Robots

I am an AI research scientist at Meta and an incoming assistant professor with the CS department at Johns Hopkins University.

I strive to build full-stack robotic systems that perceive, reason, and act with human-level efficiency, and ultimately surpass it. My work lies at the perception-action interface, tackling both how robots should represent the world around them and how they use those representations for action. It draws inspiration from several adjacent fields, including computer vision, machine learning, and cognitive science.

Previously, I was a postdoc at MIT CSAIL with Antonio Torralba and Josh Tenenbaum. I completed my PhD at Université de Montréal and Mila, advised by Liam Paull. My work has been recognized with PhD fellowships from NVIDIA and Google, and a best-paper award from IEEE RA-L.

News

| Jun 13, 2025 | Long overdue webpage update, featuring work through mid-2025. |

|---|---|

| Jun 13, 2025 | By Fall 2026, I will join the CS department at Johns Hopkins University as an assistant professor. |

| Sep 16, 2024 | I joined FAIR, Meta as an AI research scientist. |

| Sep 14, 2024 | I will serve as an area chair for CVPR 2025. |

| Sep 13, 2024 | I completed an eventful 2.5-year postdoc stint at MIT CSAIL. |

| Jun 1, 2024 | Serving as OpenReview chair for CoRL 2024 |

| Mar 1, 2024 | Speaking at the UMD/Microsoft future leaders in robotics and AI seminar series |

| Jan 29, 2024 | 6 papers accepted to ICRA 2024. |

| Jan 23, 2024 | Serving as associate editor for IROS and RA-L. |

| Sep 28, 2023 | Another webpage update, featuring new work, including ConceptGraphs. |

| Feb 12, 2023 | Long overdue webpage update, including the featured Conceptfusion work. |

| Mar 15, 2022 | I moved to MIT to start my potsdoc with Josh Tenenbaum and Antonio Torralba. |

| Mar 11, 2022 | Got my PhD with grade: exceptional! |

| Feb 2, 2022 | Serving as associate editor for IROS 2022 |

| Sep 15, 2021 | Organizing workshops Diff3D ICCV 2021, and the PRIBR at Neurips 2021. |

| Sep 5, 2021 | Teaching the realistic/advanced image synthesis class at McGill university (Fall 2021). |

| Jan 22, 2021 | Awarded a Google PhD fellowship (declined) |

| Dec 21, 2020 | Organizing the rethinking ML papers workshop at ICLR 2021 |

| Dec 1, 2020 | Honored to have received an NVIDIA graduate fellowship for 2021-22 |

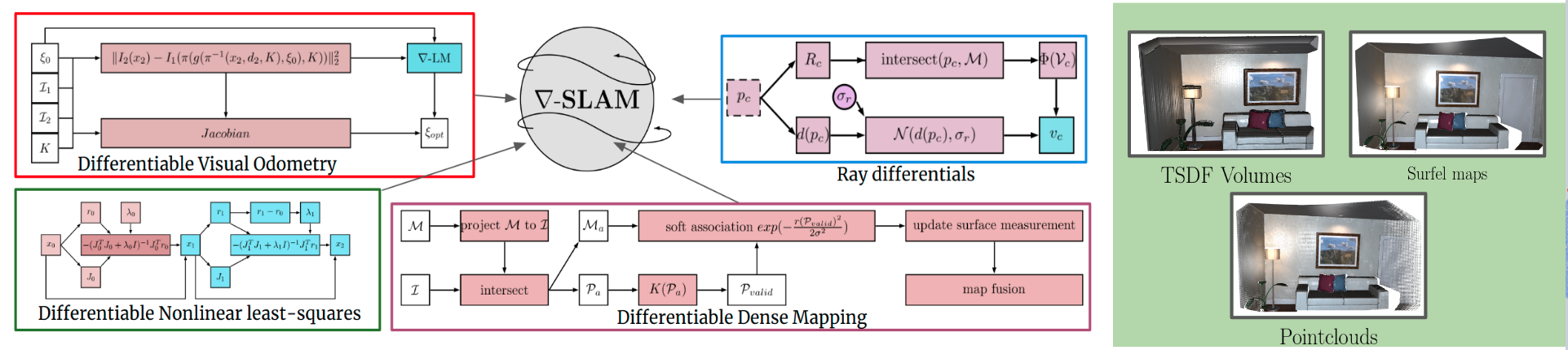

| Nov 10, 2020 | gradSLAM is available as an open-source PyTorch framework here |

| Sep 3, 2020 | Organizing the robot learning seminar series at Mila |

| Sep 1, 2020 | Organizing the differentiable vision, graphics, physics workshop at Neurips 2020 |

| Jul 6, 2020 | Selected to the RSS pioneers cohort for 2020 |

| Jun 5, 2020 | Our paper, MapLite, named best paper, IEEE RAL 2019. |

| Feb 12, 2020 | Our paper on fully differentiable dense SLAM will be (virtually) presented at ICRA 2020 |

| Nov 14, 2019 | Released NVIDIA Kaolin: a 3D deep learning library |